Suyuchen Wang (王苏羽晨)

Ph.D. Candidate, Computer Science

About Me

Suyuchen Wang is a Ph.D. candidate at Mila - Quebec AI Institute, working on Natural Language Processing and Large Language Models. His research covers efficient long sequence modeling and methods for improving LLM performance. He is supervised by Bang Liu and has published at major AI conferences.

Interests

- Artificial Intelligence

- Natural Language Processing

- Large Language Models

- Long Sequence Modeling

- Multimodal Large Models

Education

Ph.D., Computer Science

Mila - Quebec AI Institute / Université de Montréal

B.Eng. (Hons.), Computer Science

Beihang University

Looking for Jobs!

I am seeking Research Engineer or Research Scientist roles specializing in Long Sequence LLMs, Multimodal LLMs, and Agentic LLMs, with availability starting in early 2026. Feel free to connect with me via email or LinkedIn.

Recent Publications

(2025).

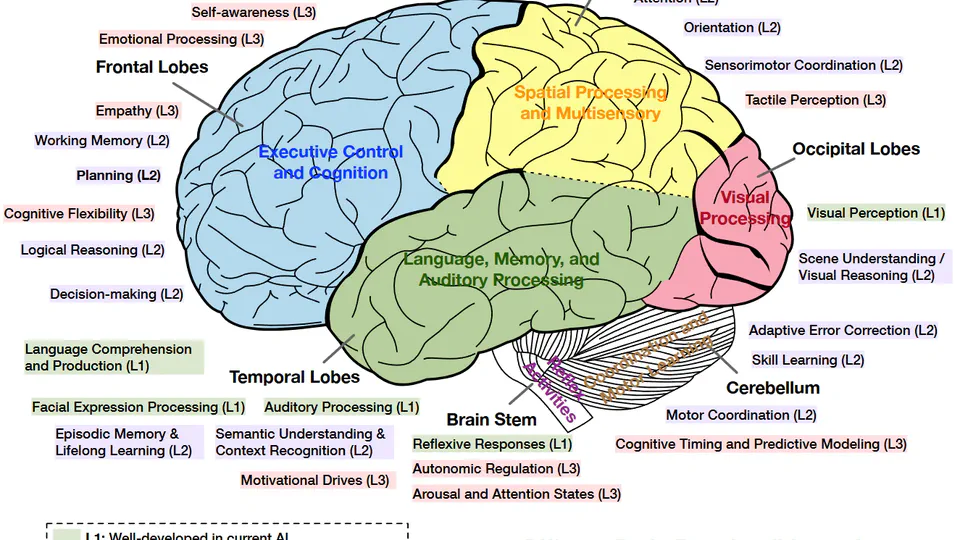

Advances and Challenges in Foundation Agents: From Brain-Inspired Intelligence to Evolutionary, Collaborative, and Safe Systems.

arXiv preprint arXiv:2504.01990.

(2025).

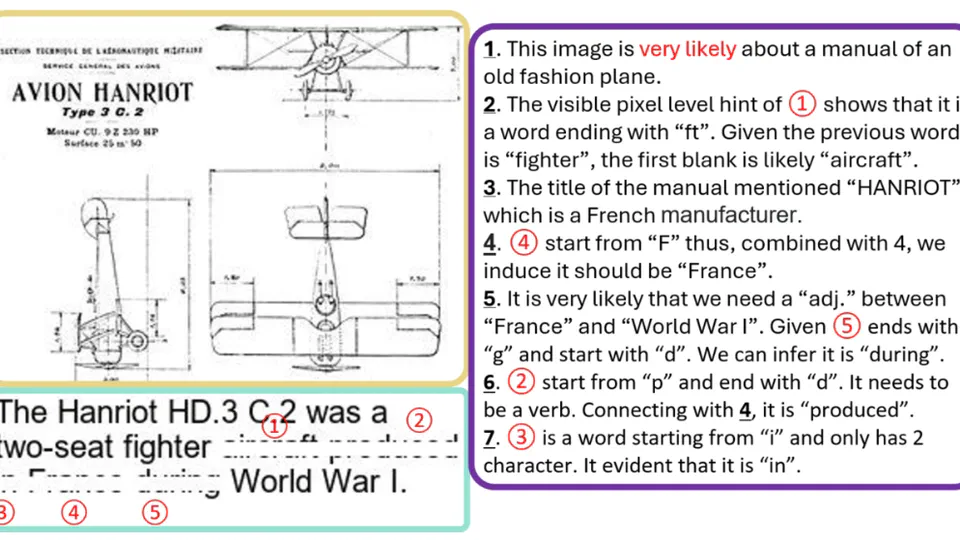

BigDocs: An Open Dataset for Training Multimodal Models on Document and Code Tasks.

The Thirteenth International Conference on Learning Representations (ICLR 2025).

(2025).

GraphOmni: A Comprehensive and Extendable Benchmark Framework for Large Language Models on Graph-theoretic Tasks.

arXiv preprint arXiv:2504.12764.